Taming the Wild West: Applying Software Engineering Principles to Web Analytics

Current State

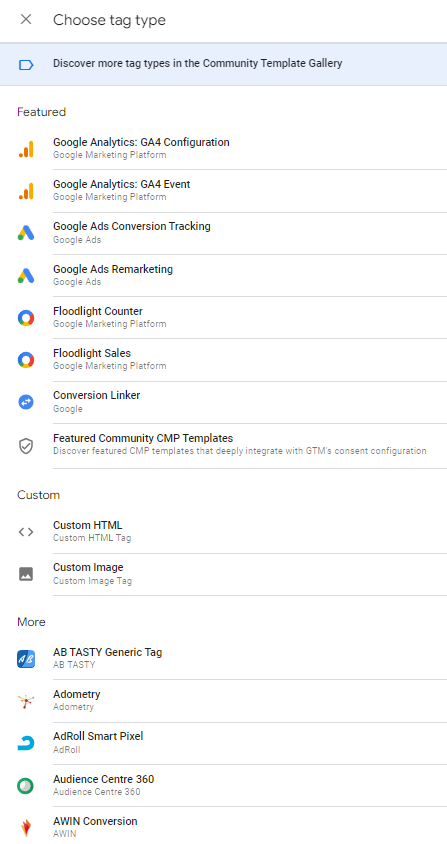

The world of web analytics often resembles the wild west with businesses eagerly tracking everything without fully considering the holistic context and purpose of data collection.The advent of tag management systems like Google Tag Manager (GTM) has further accelerated this process with marketing teams now able to swiftly deploy code snippets onto websites which unlocks numerous opportunities and benefits.

However this convenience does come at a cost as it unintentionally introduces disorder and chaos due to the lack of governance around data collection. This lack of governance arises because of the newfound ability to inadvertently introduce tracking code snippets into a production environment without a thorough understanding of their potential impact. This could have unexpected impacts such as accidental introduction of malicious code, diminished website performance or even complete website breakdowns in the worst-case scenarios.

As Uncle Ben once wisely said: “with great power comes great responsibility.” This adage holds true for web analytics and perhaps lessons can be borrowed from the field of software engineering to bring much-needed structure to this seemingly unrelated domain. By applying well-established principles, hopefully we can foster a more structured and purposeful approach to data collection and thereby web analytics.

Don’t Reinvent the Wheel

One of the fundamental principles of software engineering is to avoid reinventing the wheel. Similarly in web analytics, there are often existing solutions available that can be leveraged instead of creating custom implementations from scratch. By utilizing standardized tags and built-in / community templates businesses can save time and effort while ensuring consistency and accuracy in their data collection processes.

Rather than developing unique tags for every tracking scenario, it is beneficial to create a repository of reusable tags, triggers, and variables or even build a new custom template and share it with the community. This approach not only saves development time but also enables businesses to maintain a cohesive and standardized tracking methodology across various projects.

Write Good, Not Shitty Code

Web analytics code is no different from production code as it can directly impact a website’s functionality. Implementing poorly written or erroneous tags can lead to website malfunctions or even complete breakdowns, negatively affecting the end user’s experience.

Do you recognise what the following is? It is one of the checkout steps for a ecommerce brand where there was a bad tag in GTM which essentially reset all cookies on the page and hence broke the rendering of the page. Such incidents precisely enforce the fact that Web analytics code is equivalent to production code.

Some high-level coding principles can also be abstracted and applied to your everyday analytics implementations:

KISS Principle: Over-Engineered Solutions

In an attempt to maximize the capabilities of tag management systems, it is common to try to create overly complex and over-engineered solutions. The KISS principle stands for “Keep It Simple, Stupid” but I feel that is a bit rude so I prefer "Keep It Simple, Silly".This principle encourages simplicity and elegance in code design. By focusing on essential tracking requirements and avoiding unnecessary complexity, businesses can ensure their analytics implementations are more manageable, scalable and easier to maintain.

DRY Principle

The "Don't Repeat Yourself" (DRY) principle is highly applicable to web analytics implementation. Duplicate tags, triggers, or variables across different containers or pages with common functionality can lead to inconsistencies and maintenance challenges. By identifying patterns and creating reusable elements within your tagging solutions, businesses can reduce redundancy, improve consistency and simplify the management of their analytics implementation.Single Responsibility Principle / Separation of Concerns

The Single Responsibility and Separation of Concerns principles both promote modular and maintainable web analytics implementations.The Single Responsibility Principle suggests that a class (or in the case of Web Analytics an element such as a tag) should have a single responsibility or purpose and that responsibility should be encapsulated within the class.

Similarly the Separation of Concerns is a software design principle that advocates for dividing a system into distinct and self-contained parts where each part addresses a specific concern or responsibility and ensuring that each part focuses on a single concern without unnecessary dependencies or overlapping responsibilities.

Following the above mantra, tags and triggers should be defined with clear and distinct purposes to allow for easier management and updating individual tags should not impact other tags in the analytics implementation. This approach helps simplify the troubleshooting process when debugging for tracking issues as issues can be quickly isolated to specific tags and triggers.

Test & Document Everything

There should always be documentation about your tracking implementation.GitLab has a very good overview on the importance of documentation within an organisation (in-general) which I highly recommend reading.

Documentation should cover configurations of tags, triggering events and clearly articulate how certain events are triggered and tracked on a site / app and what the downstream event would look like once it has been captured (i.e event_name and associated parameters).

Documentation does not require any specific tool or platform and even an external spreadsheet will do the job - just remember to keep it updated!

Comprehensive testing is also vital for both software engineering and web analytics, and should always include pre-deployment testing as well as ad hoc testing when making changes to live environments. To finish off and emphasize the importance of testing in the production environment after a new deployment, I leave you with the following well-known story around unaccounted edge cases:

A software tester walks into a bar.

And orders:

a beer.

3 beers.

0 beers.

99999999 beers.

a lizard in a beer glass.

-1 beer.

"random" beers.

Testing complete.

A real customer walks into the bar…and asks where the bathroom is.

The bar goes up in flames

* This post is adapted from a talk I gave at MeasureCamp Copenhagen 2023. Original slides can be found here